Battle of tools

We are living in an era in which computing has moved from mainframes to personal computers to the cloud. Along the way, we started generating enormous amounts of data. At the same time, the manifold increase in computing power brought advances in the application of algorithms that can extract insights from the huge amounts of data being generated. The future of decision making will rely greatly on data, and no industry will remain untouched by this development. Data, however, has its own set of issues and challenges; for the available data to be meaningful and concise, one needs to organize it efficiently. Though technology plays an important role in developing a working solution, the foundation of a robust analytical solution relies heavily on clarity in the fundamental concepts of data science, as well as on an understanding of business and domain-related issues. The essence of this statement has been aptly conveyed by Prof. Dinesh Kumar, who has been featured several times among the best and most prominent analytics academicians in India:

“Focus and understand statistical learning, machine learning and artificial intelligence concepts. Many spend too much time on technologies such as R, Python, Hadoop and so on. The technologies are important, but you cannot become a successful data scientist if your conceptual knowledge is weak.” - Dinesh Kumar U

Technology is an evolving field and has eased the work of data scientists as they convert conceptual knowledge into working solutions. Without undermining the role of technology, we all know that there are several licensed and open source tools for data science. People working in the field will have heard of tools such as SPSS, Stata, Python, SAS, R, RapidMiner, KNIME and Minitab, even if they have not used all of them. With so many tools in the market, all competing to prove their usefulness, an enduring question arises: “Which is the best tool for data science?” Of late, we have seen a surge in the usage of open source tools like Python and R. It may not be an overstatement to say that most professionals working in data science use either Python or R. However, the question still remains: “Which is best, Python or R?” We come across questions like these from students, corporate professionals and data science enthusiasts:

- “I want to make a career in data science. Which tool should I focus on?”

- “Which tool should I use for data analysis, Python or R?”

- “I have heard that Python is a sought-after language for building a career in data science. Many companies look for professionals with Python skills. Is it true?”

- “Can you suggest, if I should learn Python or R for doing data analysis?”

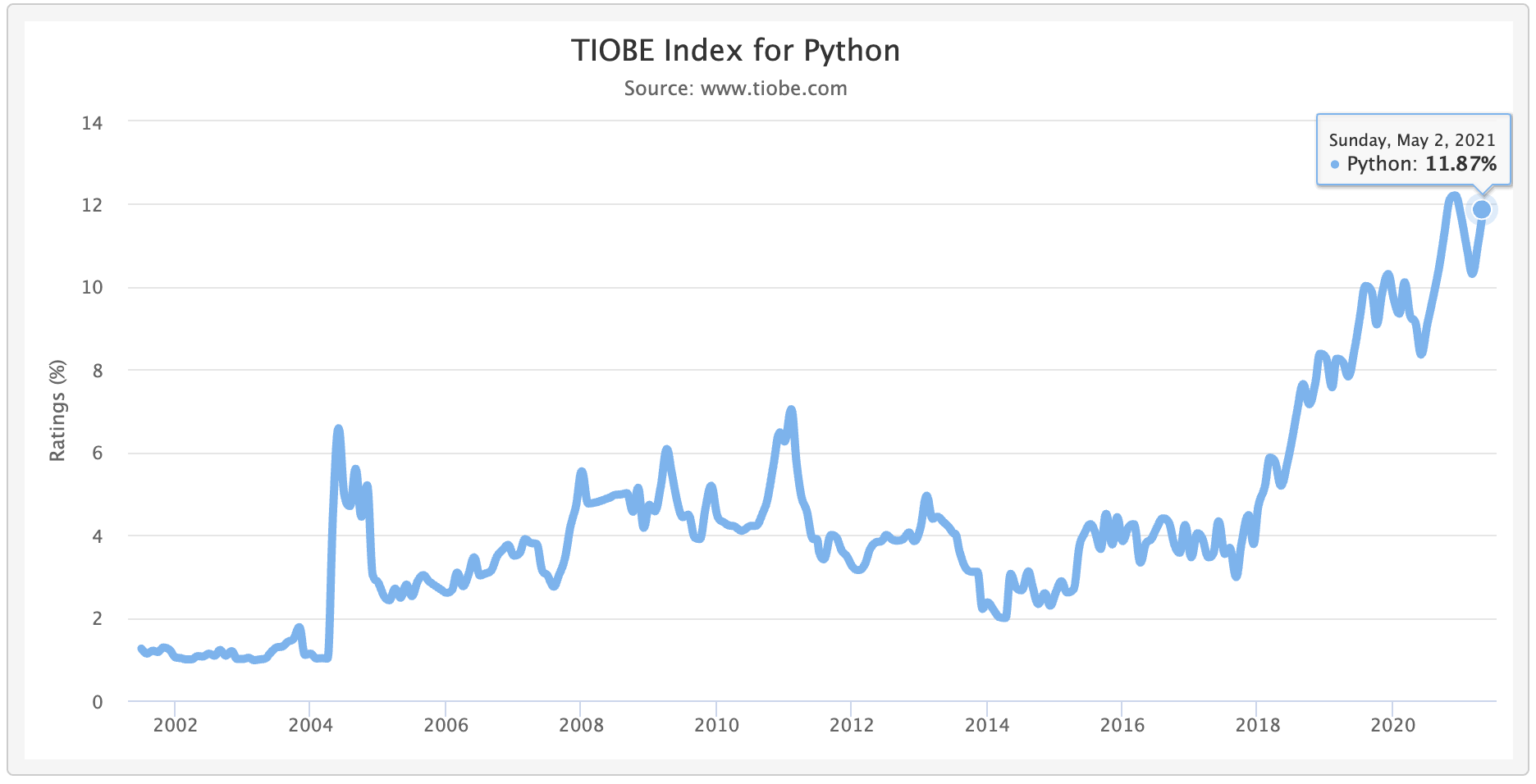

The queries are never-ending. While there may not be one right answer to questions like these, users of Python and R each vouch for the supremacy of one over the other. The debate takes an interesting turn if one looks at the TIOBE index, which measures the popularity of programming languages. We will look at the genesis of Python and R, then get into the comparison between them.

Comparing Python and R

Python is an interpreted high-level programming language for general-purpose programming. Created by Guido van Rossum at Centrum Wiskunde & Informatica (CWI) in the Netherlands and first released in 1991, Python has a design philosophy that emphasizes code readability, notably using significant whitespace. It provides constructs that enable clear programming on both small and large scales.

R is an implementation of the S programming language combined with lexical scoping semantics inspired by Scheme. S was created by John Chambers in 1976 while at Bell Labs. R was created by Ross Ihaka and Robert Gentleman at the University of Auckland, New Zealand, and is currently developed by the R Development Core Team, of which Chambers is a member. The project was conceived in 1992, with an initial version released in 1995 and a stable beta version in 2000. Let us now compare the two based on technological proficiency.

Technological Proficiency

The TIOBE Programming Community index is a measure of the popularity of programming languages, created and maintained by the TIOBE company based in Eindhoven, the Netherlands. TIOBE stands for “The Importance of Being Earnest”, taken from the name of a comedy written by Oscar Wilde at the end of the nineteenth century. The index is calculated from the number of search engine results for queries containing the name of the language. The TIOBE index for May 2021 is:

| May 2021 | May 2020 | Programming Language | Ratings | Change |
|---|---|---|---|---|
| 1 | 1 | C | 13.38% | -3.62% |
| 2 | 3 | Python | 11.87% | +2.75% |
| 3 | 2 | Java | 11.74% | -4.54% |
| 4 | 4 | C++ | 7.81% | +1.69% |
| 5 | 5 | C# | 4.41% | +0.12% |
| 6 | 6 | Visual Basic | 4.02% | -0.16% |
| 7 | 7 | JavaScript | 2.45% | -0.23% |
| 8 | 14 | Assembly language | 2.43% | +1.31% |
| 9 | 8 | PHP | 1.86% | -0.63% |
| 10 | 9 | SQL | 1.71% | -0.38% |
| 11 | 15 | Ruby | 1.50% | +0.48% |
| 12 | 17 | Classic Visual Basic | 1.41% | +0.53% |
| 13 | 10 | R | 1.38% | -0.46% |
| 14 | 38 | Groovy | 1.25% | +0.96% |
| 15 | 13 | MATLAB | 1.23% | +0.06% |
| 16 | 12 | Go | 1.22% | -0.05% |
| 17 | 23 | Delphi/Object Pascal | 1.21% | +0.60% |
| 18 | 11 | Swift | 1.14% | -0.65% |
| 19 | 18 | Perl | 1.04% | +0.16% |
| 20 | 34 | Fortran | 0.83% | +0.51% |

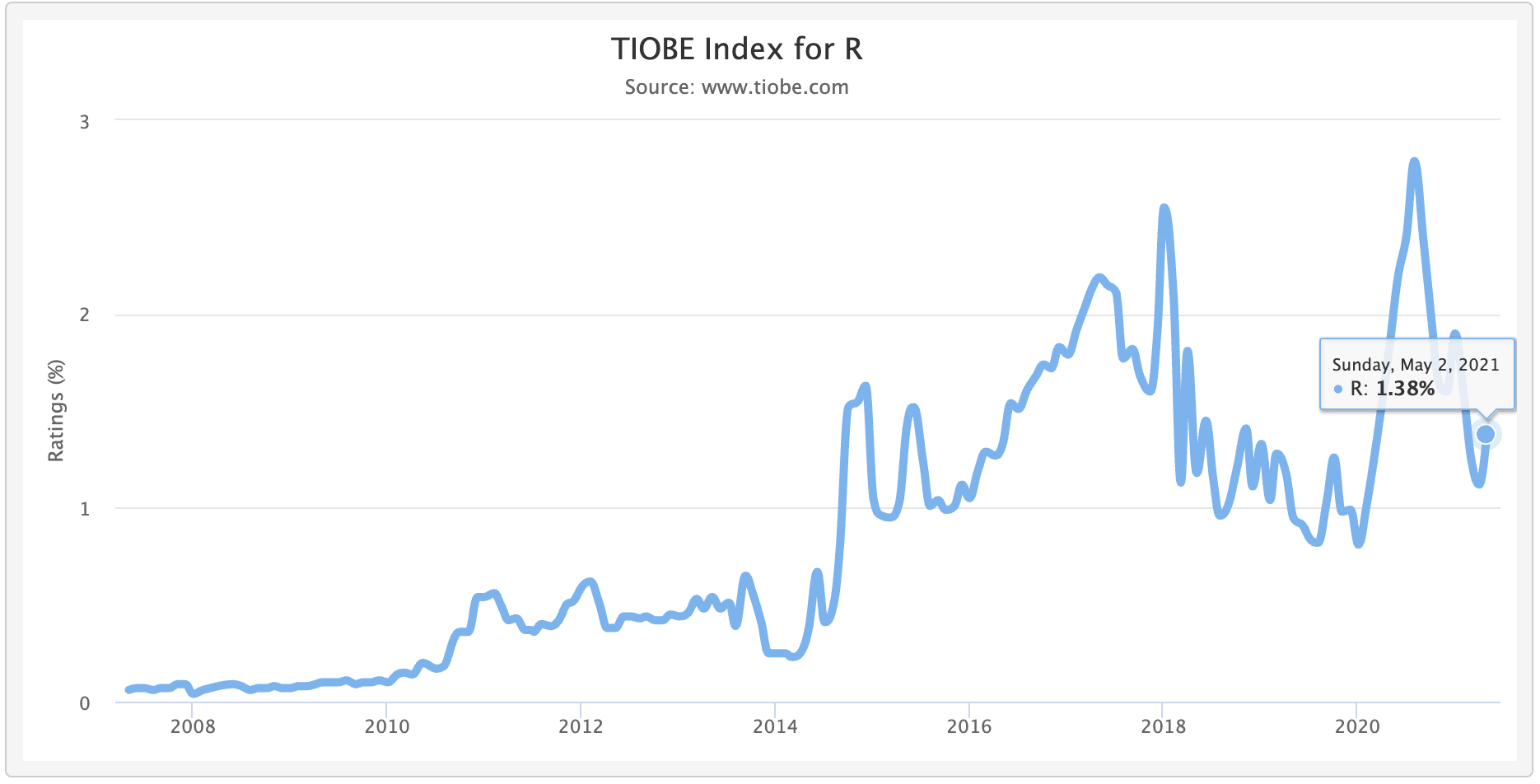

Python sits in 2nd position, compared with 13th position for R. The popularity of MATLAB as a tool for numerical analysis has picked up over the years, and it stands in fifteenth position as of May 2021. MATLAB, however, is used in many other fields, which contributes to its popularity, so we will narrow the scope of the comparison to Python and R. Let us look at the popularity of Python and R over the years as well:

The TIOBE index and the trend from 2001 onwards may make the Python community ecstatic. At the same time, it may feel like doomsday for R followers. However, let us read the TIOBE index with a more pragmatic approach.

- According to the site, the TIOBE index is “not about the best programming language or the language in which most lines of code have been written”.

- The index covers searches in Google, Google Blogs, MSN, Yahoo!, Baidu, Wikipedia and YouTube. The index is updated once a month.

- The site does claim that the number of web pages may reflect the number of skilled engineers, courses and jobs worldwide.

The above points suggest that the popularity of Python as a language has varied reasons. Python is a developer's language for general-purpose programming. It started as a successor to Perl for writing build scripts and all kinds of glue software, but gradually it entered other domains as well. Nowadays it is quite common to have Python running in large embedded systems. Having already reached second place, Python might even become the new number 1 in the long run. R is a programming language and free software environment for statistical computing and graphics that is supported by the R Foundation for Statistical Computing. The R language is widely used among statisticians and data miners for developing statistical software and for data analysis.

Both Python and R evolved during the 1990s. Python has its roots in the Netherlands, while R finds its genesis in New Zealand. Apart from having origins in different geographies, the background and purpose for which the two evolved seem to be completely different as well. Python is a more robust, general-purpose programming language in which data analysis is a drop in the ocean compared with the bigger things Python was envisioned to perform, whereas R seems to be a language built for statisticians, to provide them with an environment for statistical computing and visualization.

Because the TIOBE index measures popularity by the number of search engine results, it will naturally put Python well above R, owing to the umpteen applications of Python. The claim that search results also reflect the number of engineers or jobs for Python seems correct for the same reason. This also seems to be the reason why Python has bigger community support in general and why several useful packages are available for data science applications. In all, more than 100,000 (1 lakh) packages are available in Python.

Undoubtedly, Python is ubiquitous and has climbed the ladder to be among the most popular languages in a very short time. But is this a worrying trend for R users? After all, a scarcity of engineers skilled in R may suggest that R will be treated as a niche technology for statistical computing in corporate and academic institutions alike. As far as community support and the addition of new and useful packages are concerned, R may be lagging, but it is not far behind. R has more than 12,000 packages for data science hosted on CRAN, which is not a small number considering that it is software for statistical computing. It would be interesting to know the actual number of Python packages for data science alone. The news that Microsoft R Open is the enhanced distribution of R from Microsoft Corporation is a shot in the arm and suggests that corporate giants like Microsoft have something in mind to grow the user base of the R community.

Keeping aside popularity in terms of the TIOBE index, community support and so on, can we compare the usage of Python and R from the perspective of data science applications?

Application Proficiency

We decided to take up a comparative study of the two prominent tools for data analysis, Python and R. Rather than asking only “which is better, Python or R?”, we will broaden the scope of the question and ask which tool is better for performing:

- Statistical Learning

- Machine Learning

- Deep Learning

- Text Analytics

- Model deployment

Though data pre-processing and data visualization are important aspects of data analysis, we will compare Python and R on those aspects at a later point. The focus of this article is to compare the two from the statistical learning aspect. In the series of articles to follow, we will compare them on machine learning, deep learning and the other aspects as well.

Statistical Learning

Advances in infrastructure and computing technology have led to the application of models to varied use cases of business importance. However, apart from achieving the highest possible prediction accuracy, which machine learning models are tuned to achieve, it may be desirable for certain businesses to draw inferences from the model and from the underlying data used to develop it. Many models can be used for inference-based modelling. The simplest and most commonly used models in this area are multiple linear regression and logistic regression. Let us take up multiple linear regression as a technique for understanding the sold price of a player in the Indian Premier League (IPL), a popular Twenty20 (T20) cricket tournament. The performance of the players, which may finally decide their sold price, can be measured through several metrics. Notably, although the IPL follows the Twenty20 format of the game, it is possible that the performance of players in other formats, such as Test and One-Day matches, influences player pricing. A few players have excellent records in Test matches, but their records in Twenty20 matches are not very impressive. The objectives of modelling with multiple linear regression could be to:

- Estimate the average sold price (dependent variable) of a player given the player's performance metrics (independent variables).

- Understand which performance metrics are statistically significant variables in estimating the average sold price.
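As a hedged sketch of what these two objectives mean formally, the model and the per-variable significance test can be written as follows. The symbols are notation introduced here for illustration and are not taken from the IPL case itself.

```latex
% Y: sold price; X_1, ..., X_k: performance metrics; \varepsilon: random error
Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_k X_k + \varepsilon

% Objective 1: estimated average sold price for given performance metrics
\widehat{E}[Y \mid X_1, \dots, X_k] = \hat{\beta}_0 + \hat{\beta}_1 X_1 + \dots + \hat{\beta}_k X_k

% Objective 2: metric X_j is statistically significant if H_0 is rejected
H_0 : \beta_j = 0 \quad \text{versus} \quad H_1 : \beta_j \neq 0
```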

There are many issues in model building, and there is a set of assumptions which needs to be satisfied before a valid model can be attained. One of the issues which needs to be sorted out is variable selection: in other words, which variables will finally be retained by the model that is built. There are many strategies which can be used for variable selection:

- Forward selection

- Backward elimination

- Forward selection combined with backward elimination (stepwise selection)

The above strategies rely on performing a partial F test, so that finally only the variables which are statistically significant are retained in the model. Another strategy for variable selection is to use a metric such as the Akaike Information Criterion (AIC) or the Bayesian Information Criterion (BIC).
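For reference, the standard textbook definitions of these quantities are sketched below. The notation is an assumption made here: $n$ is the number of observations, $k$ and $r < k$ are the numbers of predictors in the full and reduced models, $SSE$ is the residual sum of squares, $p$ is the number of estimated parameters and $\hat{L}$ is the maximized likelihood.

```latex
% Partial F test: does dropping (k - r) predictors from the full model
% increase the residual sum of squares significantly?
F = \frac{(SSE_{\text{reduced}} - SSE_{\text{full}}) / (k - r)}
         {SSE_{\text{full}} / (n - k - 1)}
    \sim F_{\,k - r,\; n - k - 1} \quad \text{under } H_0

% Information criteria: lower values indicate a better trade-off
% between goodness of fit and model complexity
\mathrm{AIC} = 2p - 2\ln\hat{L}
\qquad
\mathrm{BIC} = p\,\ln n - 2\ln\hat{L}
```

BIC penalizes each additional parameter by $\ln n$ instead of 2, so for $n \geq 8$ it tends to retain fewer variables than AIC.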

Statistical Learning - Python

Let us look at some of the popular packages which are available in Python for building a statistical learning model:

- statsmodels.api

- statsmodels.formula.api

- scipy.stats

We have not mentioned sklearn as it is primarily for building machine learning models rather than statistical learning models.

Statistical Learning - R

Some of the popular packages for building statistical learning models in R:

- stats

- MASS

- caret

- mixlm

caret is a useful wrapper package in R which gives the flexibility of building statistical as well as machine learning models.

Comparison based on Statistical Learning

We are not demonstrating full model building using R or Python here. However, if one needs to build a full model using all the performance metrics (variables) to understand the sold price of a player in the IPL, as well as to identify the statistically significant variables, the packages mentioned above in Python and R can be used to develop such a model.

In Python, using statsmodels.formula.api:

> import statsmodels.formula.api as smf

> regressor_OLS = smf.ols(formula='Y_variable ~ X_variable', data=df).fit()

In R, using stats:

> regressor_OLS <- lm(Y_variable ~ X_variable, data = df)

The only issue with the above approach to building a regression model is that the final model will contain insignificant variables alongside the significant ones.

Are there packages which can apply the variable selection strategies discussed in the section above? It seems there is no built-in way, in the Python packages listed above, to apply the partial F test, AIC or BIC for building a stepwise model. The only way to achieve it is to write a custom function which removes statistically insignificant variables. This holds true for multiple linear regression as well as for logistic regression.
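To give a feel for what such a custom function might look like, here is a minimal sketch of backward elimination in Python. It makes several assumptions that are not from the original text: it uses AIC rather than p-values as the removal criterion, it relies only on the standard library (in practice the fit would more likely come from statsmodels), and the column names `runs`, `wickets` and `age` and the synthetic data are purely hypothetical.

```python
import math
import random


def ols_rss(rows, y, cols):
    """Residual sum of squares of an OLS fit of y on an intercept plus `cols`."""
    # Design matrix: a leading 1 for the intercept, then the chosen columns.
    X = [[1.0] + [row[c] for c in cols] for row in rows]
    p = len(X[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    A = [[sum(x[i] * x[j] for x in X) for j in range(p)]
         + [sum(x[i] * yi for x, yi in zip(X, y))] for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting.
    for i in range(p):
        pivot = max(range(i, p), key=lambda r: abs(A[r][i]))
        A[i], A[pivot] = A[pivot], A[i]
        for r in range(p):
            if r != i:
                factor = A[r][i] / A[i][i]
                A[r] = [a - factor * b for a, b in zip(A[r], A[i])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return sum((yi - sum(b * v for b, v in zip(beta, x))) ** 2
               for x, yi in zip(X, y))


def aic(rows, y, cols):
    """Gaussian AIC up to an additive constant: n*ln(RSS/n) + 2*(coefficients)."""
    n = len(y)
    return n * math.log(ols_rss(rows, y, cols) / n) + 2 * (len(cols) + 1)


def backward_eliminate(rows, y, cols):
    """Drop one predictor at a time for as long as doing so lowers the AIC."""
    current = list(cols)
    best = aic(rows, y, current)
    while current:
        # AIC of every model obtained by removing a single predictor.
        trials = [(aic(rows, y, [c for c in current if c != d]), d)
                  for d in current]
        trial_aic, drop = min(trials)
        if trial_aic >= best:
            break  # no single removal improves the model
        best = trial_aic
        current.remove(drop)
    return current


# Hypothetical synthetic data: only "runs" truly drives the response.
rng = random.Random(0)
rows = [{"runs": rng.gauss(0, 1), "wickets": rng.gauss(0, 1),
         "age": rng.gauss(0, 1)} for _ in range(200)]
y = [5 + 3 * row["runs"] + rng.gauss(0, 0.5) for row in rows]

selected = backward_eliminate(rows, y, ["runs", "wickets", "age"])
print(selected)
```

The loop stops as soon as no single removal lowers the AIC, which is the same stopping rule a backward search on AIC uses. Swapping the `2 *` penalty for `math.log(n) *` would turn the criterion into BIC.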

However, R has built-in functions, step() in the stats package and stepAIC() in the MASS package, which implement AIC as a criterion for variable selection:

> step(object, scope, scale = 0, direction = c("both", "backward", "forward"), trace = 1, keep = NULL, steps = 1000, k = 2, ...)

> stepAIC(object, scope, scale = 0, direction = c("both", "backward", "forward"), trace = 1, keep = NULL, steps = 1000, use.start = FALSE, k = 2, ...)

If one wants to implement the partial F test for feature selection, the mixlm package provides forward(), backward(), stepWise() and stepWiseBack() for this purpose:

> forward(model, alpha = 0.2, full = FALSE, force.in)

> backward(model, alpha = 0.2, full = FALSE, hierarchy = TRUE, force.in)

> stepWise(model, alpha.enter = 0.15, alpha.remove = 0.15, full = FALSE)

> stepWiseBack(model, alpha.remove = 0.15, alpha.enter = 0.15, full = FALSE)

Each of the above functions expects a model object on which the variable selection strategy is applied. Based on the objectives set forth for the IPL regression model, one can infer that R provides various model selection strategies out of the box, whereas to achieve a similar outcome in Python one may have to write a custom function. We may not have provided an exhaustive list of the packages which can help achieve this objective in R or in Python. However, R seems to have an edge as far as implementing statistical concepts and building an inferential model is concerned.

In the next article, we will take up a detailed comparison of building machine learning models in Python and R.